mastering Colab

tl;dr: How to use and productionize Google Colab, a free Jupyter Notebook service

cost: $0

build time: 15 minutes (MVP)

Google Colaboratory (or just Colab) is a recently popular cloud-ish implementation of Jupyter Notebooks.

In addition to Jupyter features you're familiar with - cell based execution, shared state across cells, and visualization - Colab offers easy GDrive file I/O, a cleaner UI, and some native options for versioning.

Below, I'll cover a few of the ways to get the most out of Colab

- magic operators (! and %)

- importing and exporting files to your Google Drive

- reading and writing data to your Google Sheets

- forms UX

- versioning to Github

All the code covered in this article is implemented in this Colab Notebook. Feel free to make a copy and play around with it

#1 - magic operators

set environment variables (it's generally best to execute the cell, delete the secret_key value, and CMD+S to prevent the key value from being stored inadvertently)

%env SECRET=1234567890

import less common dependencies (e.g. googleauth). Most common libraries are included, including beyond the standard Python lib (pandas, requests, etc).

!pip install googleauth

(for a full list of out-of-the-box libraries, use:)

!pip list

list files in local storage:

!dir

#2 - GDrive I/O

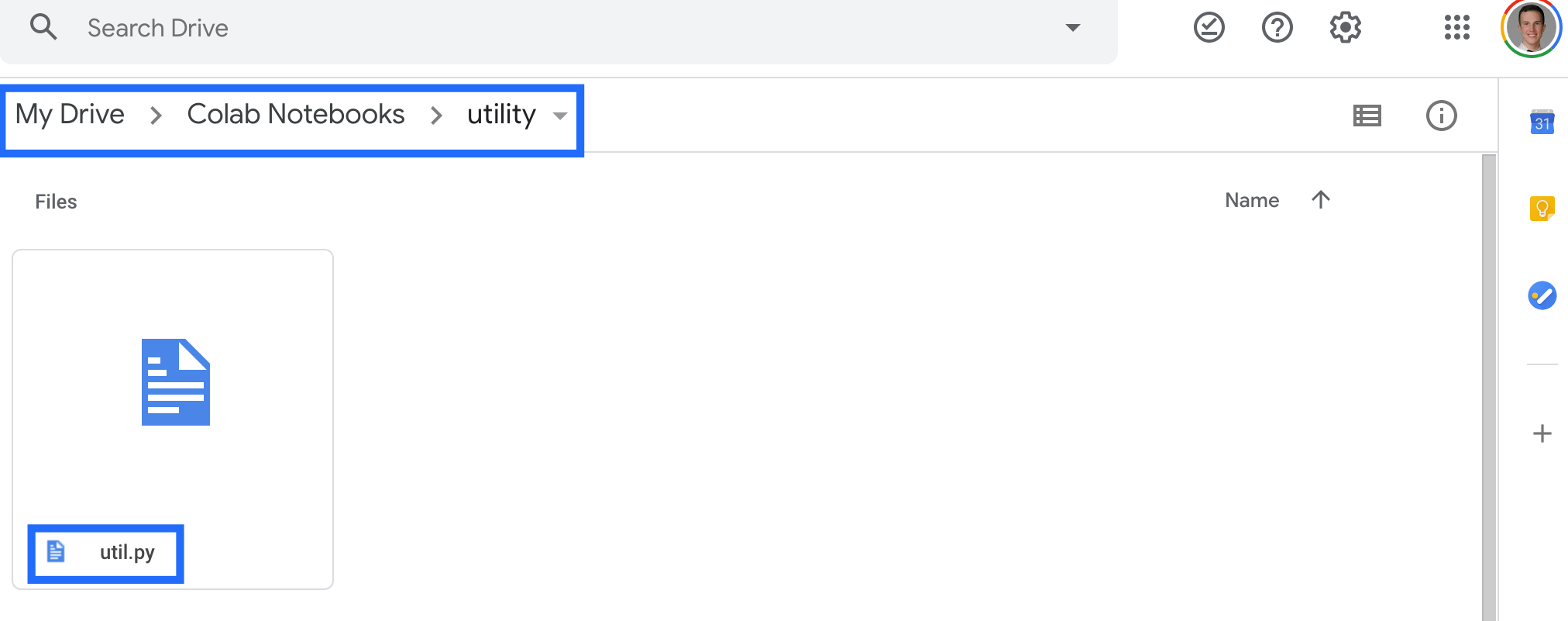

Writing a bunch of notebooks and find yourself using the same helper functions over and over? Store a .py file in GDrive and import it as a library.

from os.path import join

from google.colab import drive

from importlib.machinery import SourceFileLoader

ROOT = "/content/drive"

PROJ = "My Drive/Colab Notebooks" # Where your Colabs live

drive.mount(ROOT, force_remount=False)

util = SourceFileLoader('util', join(ROOT, PROJ, 'utility/util.py')).load_module()

from util import some_function

You can use the same mechanism to read other types of data, like CSVs.

from os.path import join

import pandas as pd

df = pd.read_csv(join(ROOT, "My Drive", "Some_Spreadsheet.csv"))

Writes are just as easy - use the drive filesystem as if it were your local directory.

with open(join(ROOT, "My Drive","text_file.txt"), "w") as file:

file.write("foo bar baz")

You can add this snippet at the bottom of a cell to automatically download file(s) (e.g. after they've finished writing)

from google.colab import files

files.download('text_file.txt')

#3 - GSpread reads and writes

Colab allows you to use your existing Google Auth to access your Google Sheets, as well.

First, lets set up a couple helper functions

from google.colab import auth

from oauth2client.client import GoogleCredentials

import gspread

import gspread_dataframe as gd

import pandas as pd

def get_gsheet_tab(sh, tab_name, **kwargs):

if isinstance(tab_name, int): # index

tab = sh.get_worksheet(tab_name)

elif isinstance(tab_name, str):

tab = sh.worksheet(tab_name)

# This avoids a specific bug around the tab being empty

try:

tab_lod = tab.get_all_records(default_blank=None)

except IndexError:

tab_lod = []

return tab_lod, tab

def get_gsheet_sheet(sheet_name):

auth.authenticate_user()

gc = gspread.authorize(GoogleCredentials.get_application_default())

if 'docs.google.com' in sheet_name:

sh = gc.open_by_url(sheet_name)

elif len(sheet_name) == 44:

sh = gc.open_by_key(sheet_name)

else: # File name

sh = gc.open(sheet_name)

return sh

Writes are straightforward. I prefer to write DataFrames to Google Sheets; if you don't want to use pandas, you can use GSpread's native .update_cells

def write_to_gsheet(df_to_write, sheet_name, tab_name):

sh = get_gsheet_sheet(sheet_name)

tab_lod, ws = get_gsheet_tab(sh, tab_name)

gd.set_with_dataframe(ws, df_to_write)

print("Written successfully")

(Converting most data structures to df is straightforward)

import pandas as pd

df = pd.read_json(input_file, orient="records") # JSON

df = pd.DataFrame(input_file) # list of dicts or list of lists

df = pd.DataFrame.from_dict(input_file) # dict with list values

Reads are even easier and return a handy list of dictionaries:

def read_from_gsheet(sheet_name, tab_name):

sh = get_gsheet_sheet(sheet_name)

tab_lod, ws = get_gsheet_tab(sh, tab_name)

return tab_lod

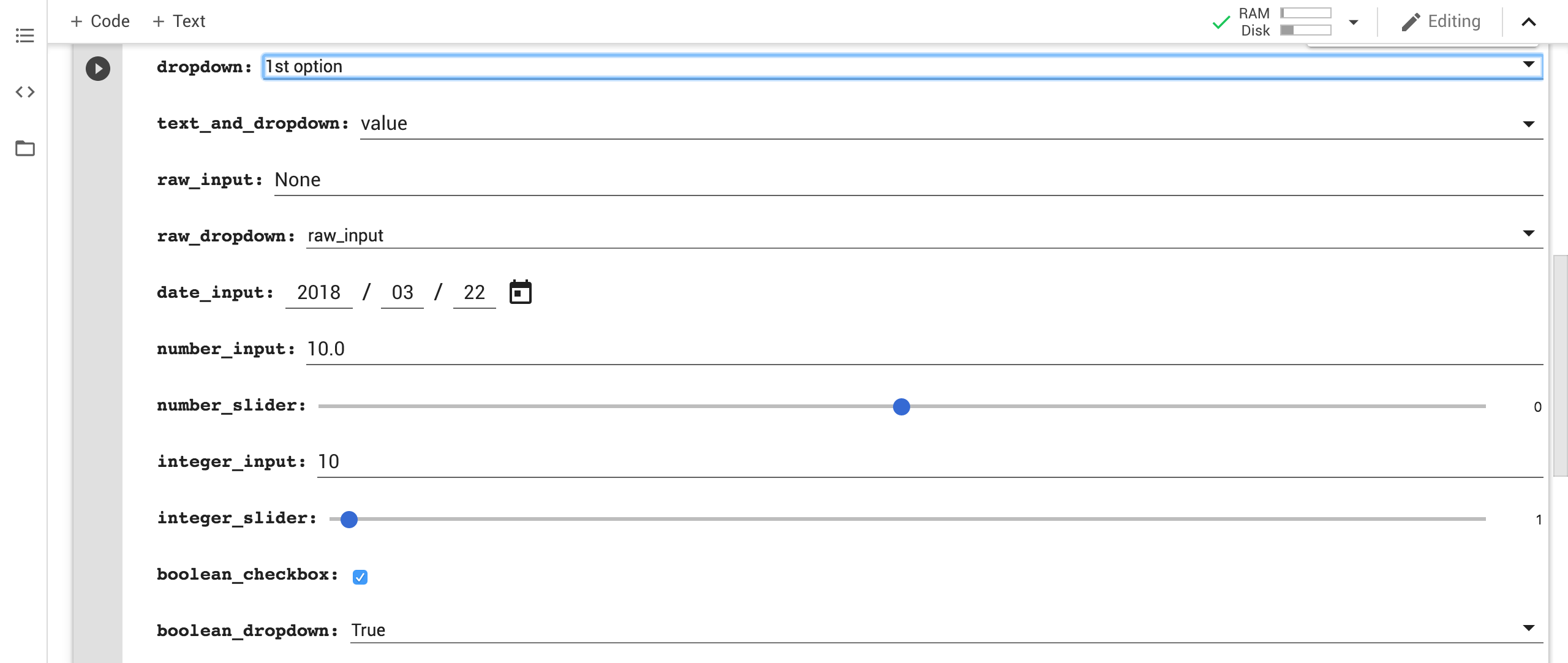

#4 - forms

Colab allows you to make a user interface over your Jupyter Notebook, so you can easily change parameters or share it with nontechnical users. A few of the more useful ones (sandbox example here):

text = 'value' #@param {type:"string"}

dropdown = '1st option' #@param ["1st option", "2nd option", "3rd option"]

text_and_dropdown = 'value' #@param ["1st option", "2nd option", "3rd option"] {allow-input: true}

raw_input = None #@param {type:"raw"}

raw_dropdown = raw_input #@param [1, "raw_input", "False", "'string'"] {type:"raw"}

date_input = '2018-03-22' #@param {type:"date"}

number_input = 10.0 #@param {type:"number"}

number_slider = 0 #@param {type:"slider", min:-1, max:1, step:0.1}

integer_input = 10 #@param {type:"integer"}

integer_slider = 1 #@param {type:"slider", min:0, max:100, step:1}

boolean_checkbox = True #@param {type:"boolean"}

boolean_dropdown = True #@param ["False", "True"] {type:"raw"}

becomes

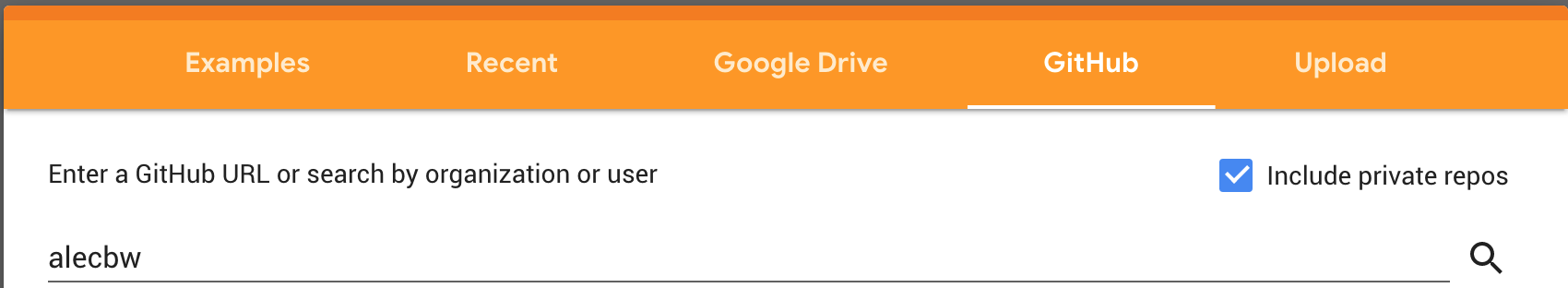

#5 - versioning

Colab has a native versioning system. It's ok. It has diffs and rollbacks, but only stores 30 days of versions. (to use: File -> Revision History).

To be safe, try to back up important Colab notebooks to a Github repo

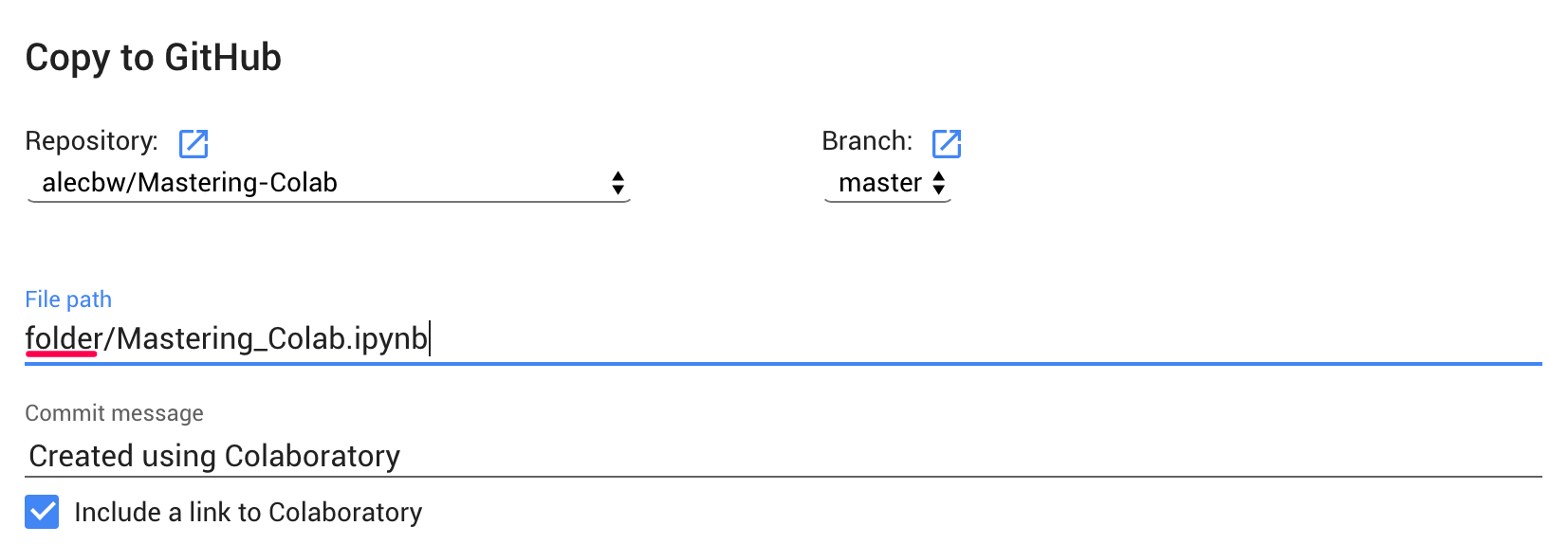

Go to the Colab menu and enable private repos (and go through the OAuth)

You can path the .ipynb into a specific folder of the repo by adding the path ahead of the filename

(make sure the repo has at least 1 commit)

Saving in this method is effectively the same as

git push origin master --force

To see the code in this article in action, visit this Colab Notebook and this Github Repo

Thanks for reading. Questions or comments? 👉🏻 alec@contextify.io